At the beginning of 2022, we set out to:

1. improve the quality of questions posed to our participants throughout our Leadership and Management modules

2. standardize the formative feedback received upon answering said questions

To date, we have written a total of 2415 new questions and set up 831 individual pieces of feedback!

Below, we are happy to share a summary of what we achieved in 2022 in collaboration with Taskbase, Sophie Hautbois, SCA trainers, and our SCA Learning & Development and AI Development Teams.

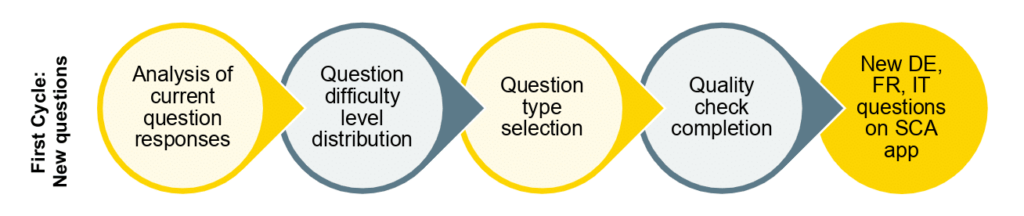

First cycle: New questions

As of the end of 2022, all Leadership and Management modules at SCA have new questions: a grand total of 2415 questions in German, French, and Italian.

As a result of creating this volume of new questions, the first cycle in our process runs like a well-oiled (virtual) machine:

1. Analysis of students’ responses to current questions

- Per module, we analyze the current questions’ formulation, difficulty level, and participants’ responses

- Based on the analysis, we decide to either keep, reformulate, or discard a question

2. Distribute questions across 3 difficulty levels

- 25% of all questions in modules are written at the “Remember” level of Bloom’s Taxonomy (Level 1)

- 35% reach the “Understand” level (Level 2)

- 45% of all questions require the learners to “Apply” (Level 3)

- Each difficulty level determines the points per question, ranging from 10 points for Level 1 to 30 points for Level 3

3. Select question type based on content, write new questions and sample solutions

- The best-suited question type is selected based on the question content, from the following:

- Multiple choice: single solution or multiple solutions

- Cloze: drop-down solutions or keyword answers

- Matching: assign a term to a definition or order a process

- Open: keyword or full-sentence answer

- Each question is written based on SCA question guidelines that outline best practices per question type

- Per question, we also include a sample solution which guides participants to the correct answer

4. Complete quality check

- After all questions and sample solutions are complete, a detailed review of the following occurs:

- Question formulation

- Question options (where they exist, for example in multiple-choice questions)

- Sample solution formulation

- Question difficulty level

- Question type selection

Following these steps, the first group of participants takes the questions in German, French, and Italian on our SCA app.
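To make the difficulty levels and points from step 2 more concrete, here is a minimal Python sketch of how a module's question mix could be summarized. It is an illustration only, not our production tooling: the function names and the example module are hypothetical, and the 20 points for Level 2 is an assumption (the process above only fixes 10 points for Level 1 and 30 points for Level 3).

```python
from collections import Counter

# Points per difficulty level. Levels 1 and 3 come from the process above;
# the value for Level 2 is an ASSUMPTION (a linear step between the two).
POINTS_PER_LEVEL = {1: 10, 2: 20, 3: 30}

# Bloom's Taxonomy labels used for the three levels.
BLOOM_LABELS = {1: "Remember", 2: "Understand", 3: "Apply"}


def summarize_module(levels):
    """Return per-level question counts, shares, and points for one module.

    `levels` is a list with one difficulty level (1, 2, or 3) per question.
    """
    if not levels:
        raise ValueError("a module needs at least one question")
    unknown = set(levels) - set(POINTS_PER_LEVEL)
    if unknown:
        raise ValueError(f"unknown difficulty levels: {sorted(unknown)}")

    counts = Counter(levels)
    total = len(levels)
    summary = {}
    for level in sorted(POINTS_PER_LEVEL):
        n = counts.get(level, 0)
        summary[level] = {
            "label": BLOOM_LABELS[level],
            "count": n,
            "share": n / total,
            "points": n * POINTS_PER_LEVEL[level],
        }
    return summary


def total_points(summary):
    """Sum the points over all difficulty levels."""
    return sum(entry["points"] for entry in summary.values())


# Hypothetical module with 20 questions (made-up numbers for illustration).
example_levels = [1] * 5 + [2] * 7 + [3] * 8
example_summary = summarize_module(example_levels)

for level, entry in example_summary.items():
    print(
        f"Level {level} ({entry['label']}): {entry['count']} questions, "
        f"{entry['share']:.0%} of the module, {entry['points']} points"
    )
print("Total points:", total_points(example_summary))
```

A summary like this makes it easy to compare a module's actual mix against the target distribution during the quality check.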

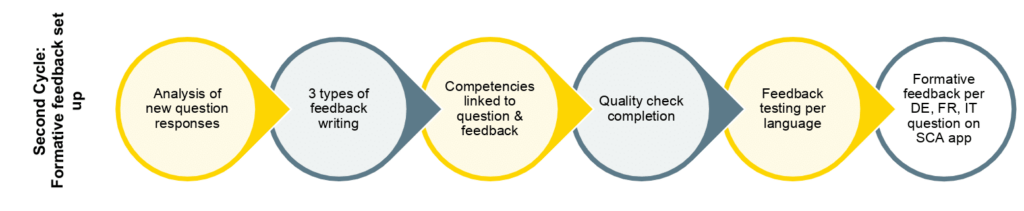

Second cycle: Set up formative feedback

Depending on a module’s schedule in 2022, we also began setting up formative feedback for some Leadership modules. Thanks to this, we have set up 831 pieces of feedback to date.

Have a look at how the first cycle of new questions informs our second cycle:

1. Analysis of participants’ responses to new questions

- We analyze the new questions’ performance based on participants’ responses

2. Write meaningful formative feedback

- Based on the students’ responses, we write 3 categories of feedback:

- Correct: confirms the students’ answers fully met the learning objective

- Incorrect: identifies a misunderstanding in participants’ answers and encourages them to review the specific part of the learning content where the answer is located

- Semi-correct: clarifies that a concept was correctly mentioned but that another key element was missing from the students’ answers

- Based on the e-test or evaluation type, the formative feedback style changes

- The feedback style for the first evaluation questions differs from that of the e-tests and the last evaluation

3. Link each question and feedback category to a competency

- We ensure each learning objective in a module is addressed in the questions

4. Complete quality check

- After all feedback is complete, a detailed review of the following occurs:

- Feedback formulation per semi-correct and incorrect category

- Feedback style per evaluation and e-test

- Selected learning objective per question and feedback

5. Input feedback into Taskbase and test the feedback setup

- Using the first group of students’ responses, we train the Taskbase algorithms to use the intended feedback

- Training the algorithms ensures participants get the right feedback based on their responses

Following these steps, the questions and formative feedback are ready for the second group of students.
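For readers who like to see structure in code, the feedback categories and the link to learning objectives could be modeled roughly as follows. This is a hedged sketch in Python: the class, field, and function names are hypothetical, the example data is made up, and none of it reflects the Taskbase API or our internal tooling.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    """The three feedback categories described in step 2."""
    CORRECT = "correct"
    SEMI_CORRECT = "semi-correct"
    INCORRECT = "incorrect"


@dataclass(frozen=True)
class Feedback:
    question_id: str
    category: Category
    objective: str  # learning objective this feedback is linked to
    text: str


def uncovered_objectives(module_objectives, feedback_items):
    """Return the learning objectives that no feedback item addresses."""
    covered = {item.objective for item in feedback_items}
    return sorted(set(module_objectives) - covered)


def missing_categories(feedback_items):
    """Map each question id to the feedback categories it still lacks."""
    seen = {}
    for item in feedback_items:
        seen.setdefault(item.question_id, set()).add(item.category)
    result = {}
    for question_id, categories in seen.items():
        lacking = set(Category) - categories
        if lacking:
            result[question_id] = sorted(c.value for c in lacking)
    return result


# Hypothetical example data (ids, objectives, and texts are invented).
items = [
    Feedback("q1", Category.CORRECT, "LO1", "Your answer meets the objective."),
    Feedback("q1", Category.SEMI_CORRECT, "LO1", "One key element is missing."),
    Feedback("q1", Category.INCORRECT, "LO1", "Please review the related chapter."),
    Feedback("q2", Category.CORRECT, "LO2", "Your answer meets the objective."),
]

print(uncovered_objectives(["LO1", "LO2", "LO3"], items))
print(missing_categories(items))
```

Checks of this kind mirror the quality check in step 4: they flag learning objectives without any feedback and questions whose feedback categories are incomplete.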

As you can see, the two processes are closely linked, one informing the other. What’s more, we always look to the data to make informed decisions: in both cases, students’ responses are the basis for the iterative question and feedback processes. Lastly, quality checks are in place to help us provide high-quality content consistently across 3 languages.

By mid-2023, we aim to complete the second cycle for all remaining Leadership and Management modules, bringing us full circle in both cycles!